Oracle presented its BEA product integration strategy earlier this morning, and the following is my take on their approach. Their market message about Fusion, Applications and Database did not change - this was more about integrating the product stacks. On the whole this is good news for existing Oracle customers who have standardized on Applications and Fusion, but not necessarily for the ones who standardized on BEA's stack (especially Portal and AquaLogic).

Following is how Oracle categorized the combined product stacks:

- Strategic Products: IMO these are the primary products in which they will continue investing, which is great news for those who have standardized on them.

- Continue and Converge Products: IMO they will increase the support price and keep these products going for the next 9 years. Customers who have standardized on these products WILL have to migrate to a different product within 9 years.

- Maintenance Products: EOL products

Oracle classified their products into the following categories, and I shall comment on each of them:

Development tools: JDeveloper will be their primary development tool, and for those who have invested in IBM, TIBCO, SAP or other products, developers will need to develop composite solutions using two environments (JDeveloper and Eclipse). Yes! Oracle will still support Eclipse, but not at the level IT organizations would prefer, especially those that have not standardized exclusively on Oracle.

One important point to note - they will no longer support Beehive. Beehive is the set of proprietary controls from BEA that was put into open source. It is key for all development on WebLogic Portal and WebLogic Integration. If Beehive is EOL, this also means EOL for WLP and WLI (as one cannot develop solutions on these two products without controls).

Application Server: This is great news for both BEA and Oracle customers - WebLogic will be the standard app server with Oracle's SCA runtime support. Plus, this also beefs up the WLS development team, which was trimmed down substantially by BEA to create AquaLogic. In addition, their JRockit (JVM) provides a differentiation that others cannot match as yet, both for near real-time performance and for virtualization.

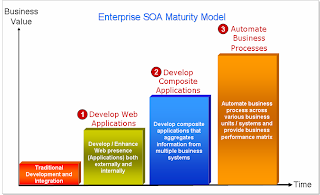

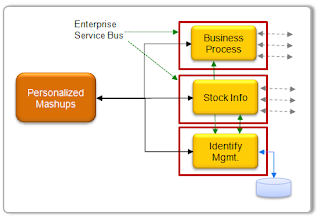

Service Oriented Architecture: Oracle Data Integrator will still be their primary tool for data integration. No mention of AquaLogic Data Services Platform (looks like it is EOL, without any formal announcement).

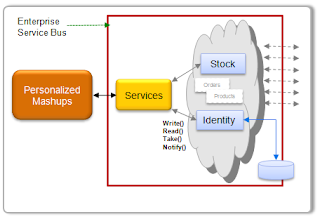

Convergence of AquaLogic Service Bus and Oracle's Service Bus into one single product. This is great: an ESB with excellent support for WS, JBI, SCA, etc. and the ability to expand. IMO this integration will take over 2 years, and standardizing on either one will do for now.

The other Oracle products are great and are capabilities that were missing in the BEA stack - these products are complementary.

BEA WLI - continued support but no further development. Not very good news for the large Telecom and Financial Industry customers who have standardized on WLI. They will need to find an alternative - other EAI or messaging platforms such as TIBCO, MQ, webMethods and SAP XI are some options. ActiveMQ (Open Source) may also be an option for those who leveraged WLI for messaging.

Business Process Management: It is great to see that Oracle has kept ALBPM - I was concerned they might not continue it for long. However, there is a lot of work to be done in ALBPM to integrate it with Oracle Web Center for human workflow (especially as they are no longer going to continue with ALUI). This could leave the door open for competition (TIBCO, Vitria, SAP, IBM or Microsoft) to walk away with their user interaction business. In addition, ALBPM will also need to fortify its support for BPEL as well as transaction management. With additional resources and expertise from Oracle, ALBPM is expected to continue being the market leader.

Overall - this is a step in the right direction for Oracle.

Enterprise 2.0 Portal: In my opinion, this is where Oracle got it wrong. A lot of large customers have standardized on WLP and/or ALUI. Oracle putting these in maintenance mode will force ALL BEA customers to migrate to another platform in the next five years. Most customers shall stop new development on BEA Portal products, which leaves the door open for their competitors. In addition, by losing WLP they just lost integration with other Content Management Solutions like Documentum, Interwoven, Filenet, etc. Agreed, they still have their own content management solution (with which they will have tight integration), and maybe their intention is to sell the entire stack to the customer.

Customers who have standardized on Oracle apps will most probably go with Oracle Web Center and those who have standardized on SAP applications will most probably standardize on SAP Portal. As for the rest of the customers - it is open season. The race will still be between IBM, Oracle, Microsoft and Open Source (Web 2.0 products).

Good to see that Ensemble and Pathways are still primary products for Oracle.

Identity Management: Now they do have a complete stack, but I am still not sure whether customers will standardize on Oracle's stack. Agreed, they may be one of the market leaders for security, but personally I may still prefer a combination of CA and/or RSA products.

System Management: Again, they now have a complete story but are not market leaders in any of their products. Guess Oracle may have to acquire BMC to complete the stack :). Alternatively, they could just go after CA and get both management and security at the same time :).

SOA Governance: Glad to see that they kept ALER intact. Not sure why they still OEM the Service Registry, especially as all they need to do is provide UDDI v3 support on ALER and they could own the entire stack.

One area that Oracle will have to address over the next year or two is IT Governance / Application Portfolio Management. Currently ALER does integrate with CA and HP IT Governance tools - however, at some point Oracle will want to own this application too (another acquisition :) ).

Service Delivery Platform: This is very good news for the telecommunication industry. The combined portfolio is very attractive and something that will get the industry excited. The biggest challenge they will most probably face is the lack of qualified resources in the market. However, knowing Oracle, they will overcome this problem through aggressive training and education programs.

Overall - this is good for Oracle and for most of the customers. However, it will be challenging for those BEA customers who had standardized on WLP and/or ALUI (Plumtree).

Disclosure: I was employed by Oracle between 1996 and 2000 and by BEA between 2002 and 2007. I am currently an independent consultant and am neither on contract with nor on the payroll of any of their competitors. This is purely my opinion based on my experience in the industry. Your comments are always welcome, and do feel free to drop me a line.

Yogish Pai