First, the problem statement: typically the Line of Business (LoB) has owned business processes while IT has owned the data and information aspects. This helps explain why these two key aspects were never in sync. The industry is now realizing the need for alignment between the process and informational aspects and has created a new discipline, “Business Architecture”, to encompass both business process and business information.

Here the idea is to leverage the business information flow in an optimal manner to drive business process definition, engineering and optimization, as opposed to shoe-horning this crucial business information into the business process. Note that business information can take several forms: rules to transform business data, business decisions made in the context of an exception in the process, regulatory influences on a process, short-circuiting rules that enable a process to be aborted without a detrimental effect, or rules that enable a process to be completed quickly in “special business situations”. It is access to this contextual business information that makes business process automation possible without losing the knowledge base of the subject matter experts.

A key goal of Business Architecture is to bring about business process efficiencies by providing access to the right information. As mentioned earlier, business architecture allows business information, which is an enterprise asset, to be shared in the context of a business process, which is a LoB asset. Business architecture also helps highlight lost opportunities by drawing up scenarios where a lack of business information availability (due to a "not invented here" complex or a lack of proper stewardship) prevents business process efficiencies from being realized. In addition, a business process without the decision-influencing contextual information still leaves the LoB user to make one-off decisions that may be based on invalid or insufficient data, which leads to process execution inconsistencies.

Furthermore, in an exceedingly inter-related enterprise, or even an extended enterprise, sub-optimal decisions made in any business process lead to contradictions in the rest of the enterprise. From an upstream process perspective, these lead to business strategies being interpreted erroneously. From a downstream perspective, the business events emitted by the siloed business process may carry insufficient or improper information for execution, leading to more exceptions in the downstream steps of the process. This may slow down the entire business process chain, with a negative impact on the business.

Another key goal of Business Architecture is to ensure that the business information captured as part of Business Process Optimization efforts is consistent in its reporting. Business process decisions made before and after the process reengineering effort have to be captured consistently and accurately. This baseline allows the process efficiencies to be quantified. If the business information emitted in the form of business process events is not made available beyond the LoB process boundary, then sub-optimal process execution in the downstream steps could again overturn the effects of any optimization work.

Information has to be captured consistently and emitted in a timely fashion, and finally the events have to be interpreted accurately, to ensure that the business strategy and the optimizations envisioned by the business in implementing the value chain activities are in fact resulting in competitive advantage. This knowledge enables further process improvements and makes it easier to deal with business process adjustments, especially when the enterprise is faced with making a dramatic shift to deal with changing market or regulatory conditions.

Finally, business process management, business process optimization and business activity monitoring need access to a well thought out MDM philosophy and strategic analytical marts that can be accessed via informational business services. These types of information access services can combine real-time operational BI, real-time business events and analytical sources to "close the informational loop". Please see my whitepaper on this topic as well: Closing the Loop: Using SOA to Automate Human Interaction.

As always thank you for your feedback.

surekha -

Practitioners' observations and views on best practices and key learnings in the fast-changing landscape of technology and architecture. - Strategic User of Information Technology - Cloud Computing - Big Data

Sunday, August 31, 2008

Friday, August 29, 2008

Architecture tenets of High Cohesion and Loose Coupling

Both of these tenets are related to one construct: that of a “Contract”.

The term contract in information technology involves the definition of high-level interfaces in the form of a coarse-grained set of operations that have well-known inputs, outputs, and clear exceptions or faults. The contract hides all of the details of implementation and allows these hidden implementation details to behave as one cohesive unit; in that sense it provides support for "high cohesion". By extension, in separating the client, consumer or caller of the contract from the implementation details, it provides support for “loose coupling”.
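To make the idea concrete, here is a minimal sketch in Python of what such a contract might look like. The names (`CustomerAccountService`, `AccountSummary`, `AccountNotFound`) and the sample data are assumptions made up for illustration; they do not come from any particular product.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


class AccountNotFound(Exception):
    """Fault declared by the contract: the requested account does not exist."""


@dataclass(frozen=True)
class AccountSummary:
    """Well-known output of the contract."""
    account_id: str
    owner: str
    balance_cents: int


class CustomerAccountService(ABC):
    """The contract: one coarse-grained operation with a known input, output and fault."""

    @abstractmethod
    def get_summary(self, account_id: str) -> AccountSummary:
        """Return the summary for account_id or raise AccountNotFound."""


class InMemoryAccountService(CustomerAccountService):
    """One possible implementation; its storage details stay hidden from callers."""

    def __init__(self) -> None:
        # Hypothetical sample data, for illustration only.
        self._rows = {"A-1": ("Pat Example", 12_500)}

    def get_summary(self, account_id: str) -> AccountSummary:
        try:
            owner, balance = self._rows[account_id]
        except KeyError:
            raise AccountNotFound(account_id) from None
        return AccountSummary(account_id, owner, balance)


def render_balance(service: CustomerAccountService, account_id: str) -> str:
    """Consumer code: depends only on the contract, never on the implementation."""
    summary = service.get_summary(account_id)
    return f"{summary.owner}: {summary.balance_cents / 100:.2f}"
```

The consumer (`render_balance`) would keep working if the in-memory implementation were swapped for a database-backed or remote one, provided the replacement honors the same operation, output type and fault.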

This concept of contract works at any of the following levels:

1. sub-system interface (for example, a persistence sub-system)

2. component interface (for example, a remote monitor)

3. layers of architecture (for example, business layer vs. presentation layer)

4. infrastructure service (for example, a messaging service)

5. SOA style business service (for example, a customer account self-service)

Furthermore, the concept works whether the implementation is a local call or a remote component call, as long as the "contract" is honored. Experienced architects also insist on unidirectional contract-based communication even between the layers of the architecture, with communication only being allowed to the very next layer down. The concept is that the more volatile layers interact with the more stable layers’ contracts without skipping levels. This level of indirection acts as a check for the entire system: the volatility of the top-level layers, and the communications from them, are limited to the adjacent layer alone, so a change in these layers does not affect multiple aspects of the system.

This concept is the key driver of the Model View Controller (MVC) pattern, wherein the presentation layer is allowed to talk only to the interface or contract of the controller or façade layer, and not to the interface of the business logic layer or the data logic layer. Also, the façade or controller layer is not allowed to initiate communication with the client or presentation layer. The contract dictates that it is the client or the presentation layer that is responsible for initiating the communication.
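The layering rule can be sketched in a few lines of Python. This is only an illustration under assumed names (`View`, `Controller`, `BusinessLayer`, `DataLayer`) and an invented flat 10% tax rule; the point is that each layer holds a reference only to the layer directly below it and that calls always start at the top.

```python
class DataLayer:
    """Most stable layer; knows nothing about the layers above it."""

    def load_order_total(self, order_id: str) -> int:
        # Hypothetical stored totals in cents.
        return {"O-1": 4_000}.get(order_id, 0)


class BusinessLayer:
    """Talks only to the data layer's contract."""

    def __init__(self, data: DataLayer) -> None:
        self._data = data

    def order_total_with_tax(self, order_id: str) -> int:
        # Hypothetical flat 10% tax, for illustration only.
        total = self._data.load_order_total(order_id)
        return total + total // 10


class Controller:
    """Facade layer: talks only to the business layer, never to the data layer."""

    def __init__(self, business: BusinessLayer) -> None:
        self._business = business

    def show_order(self, order_id: str) -> str:
        cents = self._business.order_total_with_tax(order_id)
        return f"Order {order_id}: {cents / 100:.2f}"


class View:
    """Most volatile layer: initiates every call and sees only the controller."""

    def __init__(self, controller: Controller) -> None:
        self._controller = controller

    def render(self, order_id: str) -> str:
        return self._controller.show_order(order_id)


# Wiring happens top-down; no lower layer ever receives a reference to a higher one.
view = View(Controller(BusinessLayer(DataLayer())))
```

Because `View` never sees `BusinessLayer` or `DataLayer`, a change to the tax rule or to the storage touches only one layer and its immediate caller.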

Advantages of adhering to the Contract:

A) Implementation details can change without negatively affecting the consumer or the client. Loose coupling facilitated by the contract protects the client or consumer. Also, since the behavior is highly cohesive (all hidden behind the contract in one well-knit codebase), any alterations to business rules or behavioral logic are embedded in this codebase, thus ensuring that the rule or logic change is applied completely. Without this, it is very possible that part of the logic is embedded in the consumer codebase and part of it is placed in the communication or mediation layer (leading to the anti-pattern of low cohesion and high coupling).

B) System integration testing and performance optimizations are easier when there is a known finite set of operations, inputs and outputs that will be allowed by the contract.

C) Understanding the interactions and invocations for the consumer becomes easier due to the known and pre-configured set of operations that are published on the contract. This helps to make the system or application more deterministic.

D) As long as the contract is not broken when making behavioral enhancements, new consumers can be entertained without having to create newer versions of the codebase. This can also mean adding newer audit and tracking capabilities, in compliance with internal or regulatory policies, without affecting or "informing" the consumer.

E) Since the consumer is not interacting with multiple internal points of the codebase, the system interactions and resource utilizations per consumer/client call are more quantifiable and predictable. This makes it easier to scale the system for availability. The contract or interface becomes the single point of entry for all interactions and is thus the only point that needs to be monitored to assess system resource utilization. In addition, provisioning of system resources becomes more scientific, as calls to a given operation tend to take a predictable amount of time and resources given that the inputs are also quantifiable.
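Advantages D and E can be illustrated with a small Python sketch. Everything here is hypothetical (the `QuoteService` contract, the pricing rule, the audit record layout); it simply shows audit and timing being added behind the contract, at the single point of entry, while the consumer code stays untouched.

```python
import time
from typing import Protocol


class QuoteService(Protocol):
    """Hypothetical contract with a single coarse-grained operation."""

    def quote(self, sku: str, quantity: int) -> int: ...


class BasicQuoteService:
    def quote(self, sku: str, quantity: int) -> int:
        # Hypothetical pricing rule: 250 cents per unit.
        return 250 * quantity


class AuditedQuoteService:
    """Same contract, with audit and timing added behind it."""

    def __init__(self, inner: QuoteService) -> None:
        self._inner = inner
        # Each entry: (sku, quantity, elapsed seconds).
        self.audit_log: list = []

    def quote(self, sku: str, quantity: int) -> int:
        started = time.perf_counter()
        result = self._inner.quote(sku, quantity)
        elapsed = time.perf_counter() - started
        self.audit_log.append((sku, quantity, elapsed))
        return result


def checkout(service: QuoteService) -> int:
    """Consumer: unchanged whether or not auditing is switched on."""
    return service.quote("SKU-1", 4)
```

Since every call passes through `quote`, the audit log doubles as the one place to measure per-operation cost, which is the monitoring point described in E.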

As can be seen, a simple construct such as “Design by Contract”, when taken seriously and to its logical conclusion, yields a great deal of architectural stability, robustness and extensibility.

As always your comments are welcome.

surekha -

Monday, August 11, 2008

Organizational Issues with SOA

One of the barriers to full realization of SOA potential is a shortage of the critical skill sets needed to successfully implement SOA initiatives. Let's be honest about the reality of the situation. Development teams are typically made up of outsourcing partners, temporary consultants, and employees. They all have varying degrees of training, skills and motivation when it comes to delivering a solution. These teams are responsible for carrying out the vision, approaches and processes laid out by the EA team. In general, the EA teams do a good job of laying out the target architecture, governance processes, best practices, etc. However, the developer community is generally focused on getting things working in the shortest time possible, with little regard for making sure the services have the right level of de-coupling and are designed and developed correctly for future re-use.

Having a strong governance structure can help, but relying too much on governance leads to a situation where the governance body itself becomes more of a micromanager than an oversight entity.

In my opinion, the right team structure is one where at least a few key members (preferably in a permanent capacity) have the leadership and communication skills and a full understanding and appreciation of SOA. These members can act as mentors and provide the necessary oversight to make sure services are delivering on the promise of business agility.

Ashok Kumar

Thursday, August 07, 2008

Best Practices: Master Data Management

Following are some of the best practices for adopting (note - not implementing) Master Data Management solutions within an Enterprise.

Understand the Business Context (semantics) prior to picking a solution

As per my earlier blog on EA, BPM, SOA and MDM, it is very important to understand the business context, including the semantics of what each of the business units, departments and entities means when referring to MDM entities such as Customers or Products. For example, marketing deals with leads, sales with opportunities/accounts and services with paid customers. Should all of these entities be referred to as customers throughout the business process? Or should the master entity be referred to as Organization? Common vocabulary goes hand-in-hand with master data.

For example, what does this sign on a building mean? Is the building fully occupied and available for purchase? Or does it mean that the entire building is unoccupied and available for rent? The business context needs to be clearly defined in terms of business units, departments and business processes.

Develop a comprehensive entity model.

Not only should one define the common attributes for the master data, one should also map the entire set of attributes and where each is used. For example, in customer services the customer is used as a reference, whereas in order management it is transactional data.
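One lightweight way to capture such a mapping before picking any tool is a simple attribute usage table. The sketch below is in Python with invented attribute and process names; it is an illustration of the idea, not a prescribed model.

```python
from collections import defaultdict

# Hypothetical attribute usage for a Customer master entity.
# Each entry: (attribute, business process, how the process uses it).
USAGE = [
    ("legal_name", "customer services", "reference"),
    ("legal_name", "order management", "transactional"),
    ("billing_address", "order management", "transactional"),
    ("support_tier", "customer services", "reference"),
]


def usage_by_attribute(rows):
    """Group usage rows so each attribute lists every process that touches it."""
    grouped = defaultdict(dict)
    for attribute, process, role in rows:
        grouped[attribute][process] = role
    return dict(grouped)


def shared_attributes(rows):
    """Attributes used by more than one process: candidates for the common master record."""
    grouped = usage_by_attribute(rows)
    return sorted(name for name, uses in grouped.items() if len(uses) > 1)
```

In this made-up data, `shared_attributes(USAGE)` singles out `legal_name`, since it is the only attribute used by both customer services and order management.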

The result should be a set of models and documents that can be used by both Business and IT.

Establish data governance process right up front.

It is important to establish the data governance process right up front, especially as this may require dedicated resources from all the business units as well as from IT to manage the master data. The approach that worked for us is as follows:

- Executive Leadership Team / Data Leadership Team that meets on a periodic basis (once a month) to establish data policies and standards and to set priorities for the data quality team.

- Data Stewardship (Quality) Team: the business operations people who manage data quality on a day-to-day basis. This business team enforces and ensures data quality across the enterprise.

- Enterprise Data Program team: responsible for developing and managing the programs and business rules in the various technology tools.

It is important for enterprises to consider bringing in 3rd party data providers such as D&B and Factiva for a better understanding of the market. For example, on a typical morning between 9:00am and 11:00am:

- 706 businesses will move

- 578 businesses will change their phone numbers

- 60 businesses will change their name

- 49 businesses will shut down

- 10 businesses will file bankruptcy

- 1 business will change ownership

3rd party data from D&B could be used to adopt the legal name as the name of the organization, to feed knowledge management systems that also provide news feeds and market statistics by industry, geography and demography, and to map these to existing sales.

Picking the right data standardization and matching engine:

This is a key technology that will either make or break the quality of your data. I agree that developing the business rules and configuring the matching tool is more important. However, from a technology point of view, I would dedicate a substantial amount of time to evaluating and testing the tools with existing data before picking a technology. My preference would be to use one of the following matching engines:

Trillium, First Logic (now SAP), IBM or SAS.

In cases where an MDM product is packaged with a different matching engine, I would be tempted to externalize the data quality function to one of the above-mentioned data matching engines. This is just my preference, and the other quality engines may have improved since. Do your own evaluation.

Expose all MDM functionality as services

Expose all functionality as services, such as data (address) standardization, data matching, update master and propagate master key.
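As a rough sketch of what that service surface could look like, here is a toy in-memory version in Python. The class name, the one-abbreviation address rule and the exact-match rule are all assumptions for illustration; a real deployment would delegate standardization and matching to a dedicated engine.

```python
import re
import uuid
from typing import Callable, Optional


class MasterDataService:
    """Hypothetical in-memory stand-in for an MDM hub exposed as services."""

    def __init__(self) -> None:
        self._master = {}       # master key -> golden record
        self._subscribers = []  # callbacks that receive master keys

    def standardize_address(self, raw: str) -> str:
        """Data standardization: collapse whitespace, upper-case, expand one abbreviation."""
        cleaned = re.sub(r"\s+", " ", raw.strip()).upper()
        return re.sub(r"\bST\b\.?", "STREET", cleaned)

    def match(self, name: str, address: str) -> Optional[str]:
        """Data matching: exact match on normalized name and address (deliberately naive)."""
        key = (name.strip().upper(), self.standardize_address(address))
        for master_key, record in self._master.items():
            if (record["name"], record["address"]) == key:
                return master_key
        return None

    def update_master(self, name: str, address: str) -> str:
        """Update master: reuse the matched record or create one, then propagate the key."""
        master_key = self.match(name, address)
        if master_key is None:
            master_key = str(uuid.uuid4())
            self._master[master_key] = {
                "name": name.strip().upper(),
                "address": self.standardize_address(address),
            }
        self.propagate_master_key(master_key)
        return master_key

    def subscribe(self, callback: Callable[[str], None]) -> None:
        """Register a downstream system that wants to learn master keys."""
        self._subscribers.append(callback)

    def propagate_master_key(self, master_key: str) -> None:
        """Propagate master key: notify every subscribed downstream system."""
        for callback in self._subscribers:
            callback(master_key)
```

Keeping each capability as its own operation means a consuming application can call just address standardization, for instance, without going through the full update flow.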

As usual, please do feel free to drop me a line with your comments and/or feedback.

- Yogish

Saturday, August 02, 2008

Enterprise Architecture, BPM, SOA and Master Data Management (MDM)

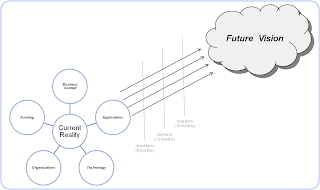

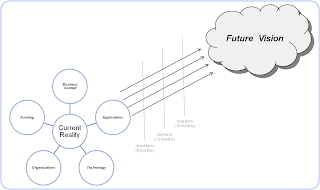

One of the best practices for Enterprise Architecture teams is to redo the enterprise road map on a periodic basis. It is typically reviewed and updated during the yearly budgeting cycle, and my preference is to perform this activity every 18 months. The best practice (and the traditional approach) is to first document the as-is, next develop the target or future state (architecture), and finally develop a short-term (6 months), mid-term (12 months) and long-term (18 months) road map, preferably an actionable road map that ties back to the business initiatives.

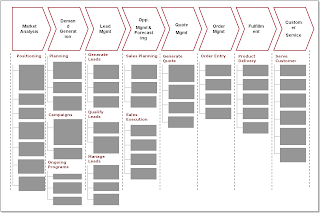

It is good to document the as-is (or current reality) from all the domains, such as Business Context, Applications, Technology, Organization and Funding. Typically the business context is best understood by identifying and mapping the key business processes at a high level.

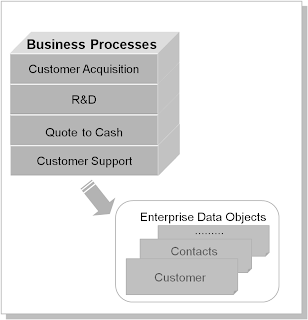

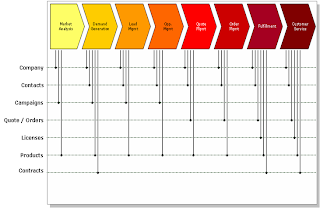

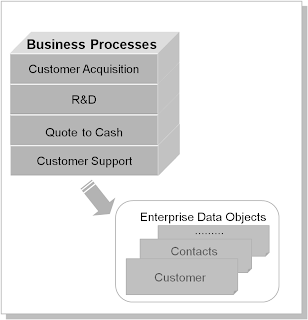

This approach not only helps establish a common vocabulary between business and IT by identifying the key business processes, it also helps identify the key enterprise data objects (entities) such as Customers, Contacts, Products and Orders. Based on the priorities of each of the business processes, the next step would be to drill down into one or all of the business processes as illustrated below.

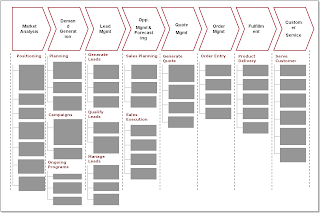

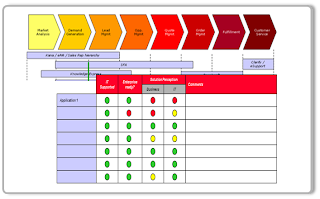

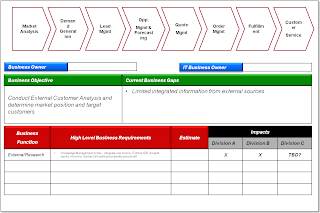

Once again, it is not necessary to use a Business Process Modeling tool (although using one would be helpful later); the objective is to clearly identify and document the next level of detail. The next step is to perform the gap analysis on each of the activities as illustrated below.

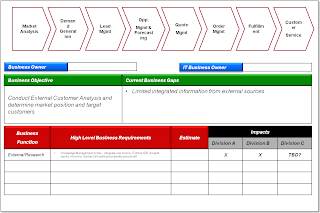

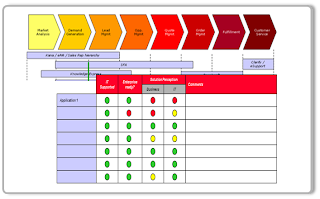

This approach enables both business and IT to clearly visualize the existing gaps, impact areas and cost estimates, which helps in developing the priorities and the investment plan. In addition, it is also important to identify and illustrate the list of applications/solutions that support a given business process, as well as its perception within Business and IT, as illustrated below.

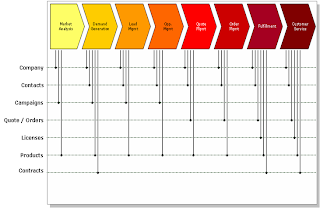

As we develop the actionable road map to the future state, one thing is obvious: no matter what we implement or adopt, whether it is a packaged application, BPM or SOA, key enterprise data crosses the silos of both the organization and the applications. It is for this reason that there is a critical need to adopt Master Data Management across the enterprise.

It is very important to spend some time understanding and mapping these enterprise data objects in the context of the business process. It would also be very helpful to develop a high-level data model and a transaction (CRUD) matrix associated with the business process before initiating the activity of selecting/implementing an MDM solution.
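A transaction (CRUD) matrix does not need special tooling to get started. The Python sketch below builds one from a plain mapping of process steps to data objects; the step names and operations are invented for illustration.

```python
# Hypothetical mapping of business process steps to the operations
# they perform on enterprise data objects (C, R, U, D).
STEPS = {
    "capture lead": {"Customer": "C", "Contact": "C"},
    "take order": {"Customer": "R", "Product": "R", "Order": "C"},
    "fulfil order": {"Order": "RU", "Product": "U"},
}


def crud_matrix(steps):
    """Return (entities, rows); each row is (step, [operations per entity])."""
    entities = sorted({entity for ops in steps.values() for entity in ops})
    rows = [
        (step, [ops.get(entity, "-") for entity in entities])
        for step, ops in steps.items()
    ]
    return entities, rows


def touched_by(steps, entity):
    """Steps that touch the entity; several steps suggest a master data candidate."""
    return [step for step, ops in steps.items() if entity in ops]


def render(steps):
    """Format the matrix as fixed-width text for a document or review."""
    entities, rows = crud_matrix(steps)
    header = f"{'step':<14}" + "".join(f"{entity:<10}" for entity in entities)
    body = [
        f"{step:<14}" + "".join(f"{cell:<10}" for cell in cells)
        for step, cells in rows
    ]
    return "\n".join([header, *body])
```

Data objects that show up under several steps, as Customer does here, are the ones worth mapping carefully before an MDM product is selected.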

I have seen a lot of examples where companies have embarked on an MDM project without developing the architecture (see my blog on Blueprinting Information Architecture for more details) and have not met the desired business outcome. The two other primary reasons for MDM failures are:

- Failing to develop, up front, a data governance model that involves all the impacted business units

- Assuming that a packaged application could be modified to be the master data source for the enterprise

As usual, please do feel free to drop me a line with your comments and/or feedback.

- Yogish
